Research Topics

Scalable Optimization Algorithms for Machine Learning Applications

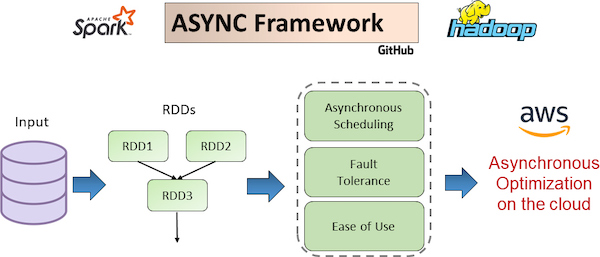

Applications that run on a cluster of multiple CPUs or GPUs face many challenges, including high communication costs, slow resources, and congestion. I develop algorithms and tools that tackle these problems from both theoretical and systems-engineering perspectives.

Approximate Matrix Algorithms

Kernel methods in statistical learning and scientific computing pose challenges such as inverting large matrices or solving high-dimensional linear systems. We develop efficient frameworks that approximate these computations while meeting user-specified accuracy requirements.
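As a rough illustration of trading exactness for a user-chosen accuracy, the sketch below solves a regularized kernel system (K + λI)x = y with the standard conjugate gradient method, stopping once the relative residual falls below a tolerance. It is not the framework described above; the RBF kernel, the helper names `rbf_kernel` and `conjugate_gradient`, and all parameter values are assumptions chosen for the example.

```python
import numpy as np

def rbf_kernel(X, gamma):
    """Dense RBF kernel matrix exp(-gamma * ||x_i - x_j||^2) (illustrative choice)."""
    sq = np.sum(X**2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * (X @ X.T)
    return np.exp(-gamma * np.maximum(d2, 0.0))

def conjugate_gradient(A, b, tol=1e-6, max_iter=1000):
    """Approximately solve A x = b for symmetric positive definite A.

    Stops when the recursively updated residual satisfies ||r|| <= tol * ||b||,
    so `tol` plays the role of the user-specified accuracy.
    """
    x = np.zeros_like(b)
    r = b - A @ x          # initial residual
    p = r.copy()           # initial search direction
    rs = r @ r
    b_norm = np.linalg.norm(b)
    for _ in range(max_iter):
        if np.sqrt(rs) <= tol * b_norm:
            break
        Ap = A @ p
        alpha = rs / (p @ Ap)
        x += alpha * p
        r -= alpha * Ap
        rs_new = r @ r
        p = r + (rs_new / rs) * p
        rs = rs_new
    return x

# Hypothetical data: 200 points in 5 dimensions, ridge parameter 0.1.
rng = np.random.default_rng(0)
X = rng.standard_normal((200, 5))
y = rng.standard_normal(200)
A = rbf_kernel(X, gamma=0.5) + 0.1 * np.eye(200)
x_approx = conjugate_gradient(A, y, tol=1e-8)
```

The solver only needs matrix-vector products with A, so in principle the dense kernel matrix could be swapped for a cheaper approximate operator without changing the loop.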

Quantized Deep Learning

We develop scalable optimization algorithms for training deep neural networks on distributed platforms. We design novel quantized stochastic algorithms that reduce the number of bits communicated among GPUs in a cluster. We adopt data parallelism and MPI communication to build efficient message passing while preserving convergence rates.
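To give a feel for how quantization can cut communication without biasing the gradient, here is a minimal sketch of one generic scheme: unbiased stochastic rounding onto a few uniform levels. It is not necessarily the algorithm developed in this work; the function names `quantize` and `dequantize`, the int8 encoding, and the level count are assumptions for the example, and the MPI exchange itself is omitted.

```python
import numpy as np

def quantize(v, levels, rng):
    """Stochastically round v onto `levels` uniform magnitude levels.

    Returns small signed integers plus one float scale, which is what a
    worker would send instead of the full-precision vector.
    Assumes levels <= 127 so the result fits in int8.
    """
    scale = np.max(np.abs(v))
    if scale == 0.0:
        return np.zeros(v.shape, dtype=np.int8), 0.0
    scaled = np.abs(v) / scale * levels        # values in [0, levels]
    lower = np.floor(scaled)
    # Round up with probability equal to the fractional part, so the
    # expected value of the quantized entry equals the original entry.
    round_up = rng.random(v.shape) < (scaled - lower)
    q = (lower + round_up) * np.sign(v)
    return q.astype(np.int8), scale

def dequantize(q, scale, levels):
    """Map the integer codes back to floating-point values."""
    return q.astype(np.float64) * scale / levels

# Hypothetical gradient vector quantized to 4 levels per sign.
rng = np.random.default_rng(0)
grad = rng.standard_normal(1000)
codes, scale = quantize(grad, levels=4, rng=rng)
approx = dequantize(codes, scale, levels=4)
```

Each entry is off by at most `scale / levels`, and because the rounding is unbiased, averaging quantized gradients across workers still estimates the true average gradient; the cost is extra variance, which is the quantity convergence analyses typically need to control.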